Building a Multi-Master Production Kubernetes Cluster with kubeadm

Preface

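kubeadm is an open-source tool provided by the Kubernetes project, with its source code hosted on GitHub. It bootstraps a k8s cluster quickly and is currently one of the officially recommended installation methods: kubeadm init and kubeadm join create the cluster and can also be used later to scale it out.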

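When kubeadm initializes the cluster, the control-plane components (apiserver, controller-manager, scheduler, etcd) and kube-proxy run as pods, so they can recover from failures automatically. Only the kubelet and the container runtime run as ordinary system services.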

1. Preparing the installation environment

1.1 Lab environment:

| Role | Hostname | IP | Components |
| --- | --- | --- | --- |
| Control plane | master01 | 10.2.4.230 | apiserver, controller-manager, scheduler, etcd, calico, nginx, keepalived |
| Control plane | master02 | 10.2.4.231 | apiserver, controller-manager, scheduler, etcd, calico, nginx, keepalived |
| Worker | node01 | 10.2.4.233 | kubelet, kube-proxy, coredns, docker, calico |
| VIP | | 10.2.4.249 | |

1.2 Set the hostname on each node

master01

hostnamectl set-hostname master01

master02

hostnamectl set-hostname master02

node01

hostnamectl set-hostname node01

1.3 Configure the hosts file on each node

Same on all nodes; append the following:

cat<<EOF>> /etc/hosts

10.2.4.230 master01

10.2.4.231 master02

10.2.4.233 node01

EOF

1.4 Configure passwordless SSH login between nodes

Same steps on every node:

# Generate a key pair; press Enter at every prompt

ssh-keygen

# Copy the public key to every node

ssh-copy-id master01

ssh-copy-id master02

ssh-copy-id node01

1.5 Disable the swap partition

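Same on every node. By default the kubelet refuses to run while swap is enabled and kubeadm's preflight checks fail, so swap must be turned off: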

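swapoff -a

To keep swap off after a reboot, the swap entry in /etc/fstab must also be commented out. My nodes have no swap partition, so that step is not needed here.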
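The fstab step can be scripted. A minimal sketch, assuming bash and awk; the function name disable_swap_in_fstab is hypothetical, and it treats any uncommented line whose third field (the filesystem type) is swap as a swap mount:

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out every active swap entry in an fstab-style file.
# A line counts as a swap entry when its third field (filesystem type) is "swap".
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  # Keep a backup, then rewrite the original file from the backup
  cp -- "$fstab" "$fstab.bak" || return 1
  awk '$1 !~ /^#/ && $3 == "swap" { print "#" $0; next } { print }' \
    "$fstab.bak" > "$fstab"
}

# On a real node (as root):
# swapoff -a && disable_swap_in_fstab /etc/fstab
```

Writing to the existing file (instead of moving a new file over it) keeps its owner, permissions and SELinux label unchanged.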

1.6 Adjust kernel parameters

Same steps on every node:

modprobe br_netfilter

cat<<EOF>> /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF
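Before applying the file, it can be checked for all three settings. A minimal sketch; check_k8s_sysctl is a hypothetical name, and it only reads the file, not the values currently active in the kernel:

```shell
#!/usr/bin/env bash
# Hypothetical check: does a sysctl drop-in file set each required key to 1?
# It only reads the file; it does not query the running kernel.
required_keys="net.bridge.bridge-nf-call-ip6tables net.bridge.bridge-nf-call-iptables net.ipv4.ip_forward"

check_k8s_sysctl() {
  local conf="${1:-/etc/sysctl.d/k8s.conf}" key rc=0
  for key in $required_keys; do
    # Remove spaces/tabs, split on "=", and require an exact "key=1" line
    if ! awk -F= -v k="$key" '{ gsub(/[ \t]/, "") } $1 == k && $2 == "1" { ok = 1 }
                              END { exit !ok }' "$conf"; then
      echo "not set to 1: $key" >&2
      rc=1
    fi
  done
  return "$rc"
}

# After "sysctl -p", the live values can be read back with:
# sysctl net.bridge.bridge-nf-call-iptables net.ipv4.ip_forward
```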

Apply the parameters:

sysctl -p /etc/sysctl.d/k8s.conf

1.7 Disable the firewalld firewall and SELinux

Same on all nodes:

systemctl stop firewalld

systemctl disable firewalld

sed -i '/SELINUX/s/enforcing/disabled/g' /etc/selinux/config

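setenforce 0

setenforce 0 switches SELinux to permissive mode right away; the disabled setting written to /etc/selinux/config applies from the next reboot.

1.8 Configure yum repositories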

Same on all nodes:

mkdir bak

mv /etc/yum.repos.d/* bak/

curl http://mirrors.aliyun.com/repo/Centos-7.repo -o /etc/yum.repos.d/Centos-Base.repo

curl http://mirrors.aliyun.com/repo/epel-7.repo -o /etc/yum.repos.d/epel-7.repo

curl http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -o /etc/yum.repos.d/docker-ce.repo

cat<<EOF>>/etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

EOF

1.9 Configure time synchronization

Same on all three nodes:

yum install ntpdate -y

ntpdate cn.pool.ntp.org

Add a cron job to keep the clocks in sync:

crontab -e

0 * * * * /usr/sbin/ntpdate cn.pool.ntp.org

This entry runs at minute 0 of every hour. Time can be kept in sync with the ntp service, the chronyd service, or a cron job as shown here.
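crontab -e is interactive. A non-interactive sketch, assuming bash; the filter name with_hourly_ntpdate is hypothetical. It reads the current crontab on stdin, drops any earlier ntpdate line, and appends a single entry that runs at minute 0 of every hour:

```shell
#!/usr/bin/env bash
# Hypothetical filter: existing crontab on stdin -> crontab with exactly one
# hourly ntpdate entry on stdout. Other entries are passed through unchanged.
with_hourly_ntpdate() {
  # grep -v exits 1 when it outputs nothing (empty crontab); that is not an error here
  grep -v '/usr/sbin/ntpdate' || true
  echo '0 * * * * /usr/sbin/ntpdate cn.pool.ntp.org'
}

# Install it (a missing crontab is treated as empty):
# (crontab -l 2>/dev/null || true) | with_hourly_ntpdate | crontab -
```

Running the install line again does not add a second ntpdate entry.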

1.10 Enable IPVS

Run on every node.

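kube-proxy supports two proxy modes: iptables and ipvs.

IPVS mode was introduced in k8s 1.8 and became stable in k8s 1.11.

iptables mode has been available since k8s 1.1.

Both IPVS and iptables are built on netfilter.

IPVS stores its rules in hash tables, whereas iptables evaluates its rules as linear chains.

Differences:

- IPVS provides better scalability and performance for large clusters

- IPVS supports more sophisticated load-balancing algorithms than iptables

- IPVS supports server health checks, connection retries and similar features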

Place the ipvs.modules loader script under /lib/modules.

Download it with:

wget https://pan.cnbugs.com/k8s/ipvs.modules

mv ipvs.modules /lib/modules

cd /lib/modules

Make the script executable and inspect it:

[root@node01 modules]# chmod 755 ipvs.modules

[root@node01 modules]# cat ipvs.modules

#!/bin/bash

ipvs_modules="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_sh ip_vs_nq ip_vs_sed ip_vs_ftp nf_conntrack"

for kernel_module in ${ipvs_modules}; do

/sbin/modinfo -F filename ${kernel_module} > /dev/null 2>&1

if [ $? -eq 0 ]; then # load the module only if modinfo found it

/sbin/modprobe ${kernel_module}

fi

done

Load the IPVS modules:

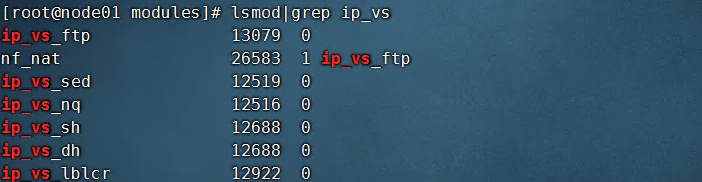

bash ipvs.modules

Then check that the modules were loaded, for example with lsmod | grep ip_vs.
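A scripted version of this check, as a sketch: it compares the module list from ipvs.modules against the first column of /proc/modules, which is the same data lsmod shows. missing_ipvs_modules is a hypothetical name. Modules built directly into the kernel never appear in /proc/modules, so they would be reported as missing even though IPVS works:

```shell
#!/usr/bin/env bash
# Hypothetical check: print each module from the ipvs.modules list that does not
# appear in the loaded-module table (first column of /proc/modules).
ipvs_modules="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_sh ip_vs_nq ip_vs_sed ip_vs_ftp nf_conntrack"

missing_ipvs_modules() {
  local table="${1:-/proc/modules}" mod
  for mod in $ipvs_modules; do
    # awk exits 0 only if some line's first field is exactly the module name
    awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$table" || echo "$mod"
  done
}

# On a node: empty output means every listed module is loaded
# missing_ipvs_modules
```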

1.11 Install base packages

Install on every node:

yum install -y ipvsadm unzip wget yum-utils net-tools gcc gcc-c++ zlib zlib-devel pcre pcre-devel nfs nfs-utils iptables-services

After installation, stop and disable the iptables service and flush its rules:

systemctl stop iptables && systemctl disable iptables

iptables -F

1.12 Install Docker

On every node:

yum install docker-ce-20.10.6 docker-ce-cli-20.10.6 containerd.io -y

Start Docker:

systemctl start docker && systemctl enable docker

Configure a registry mirror and set Docker's cgroup driver to systemd:

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://vbe25vg3.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

EOF
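Since the kubelet and Docker must agree on the cgroup driver, it can be worth confirming what daemon.json requests before restarting Docker. A rough sketch with a hypothetical function name; it uses plain text matching rather than a JSON parser, so it assumes the native.cgroupdriver option is written as in the file above:

```shell
#!/usr/bin/env bash
# Hypothetical check: print the cgroup driver requested in a Docker daemon.json.
# Plain text matching, not a JSON parser: it looks for "native.cgroupdriver=<name>".
daemon_json_cgroup_driver() {
  local file="${1:-/etc/docker/daemon.json}"
  grep -o 'native\.cgroupdriver=[a-z]*' "$file" | head -n 1 | cut -d= -f2
}

# This guide sets systemd:
# [ "$(daemon_json_cgroup_driver)" = systemd ] || echo "daemon.json does not set systemd" >&2
# After the restart, Docker's own view can be checked with:
# docker info --format '{{.CgroupDriver}}'
```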

sudo systemctl daemon-reload

sudo systemctl restart docker

2. Installing the k8s cluster with kubeadm

2.1 Install the packages needed to initialize k8s

kubeadm: the official tool for installing k8s (kubeadm init, kubeadm join)

kubelet: the node agent that starts and deletes pods

kubectl: the command-line client for creating, deleting and modifying k8s resources

kubectl reads the cluster address and credentials from a kubeconfig file (by default $HOME/.kube/config)

Install on every node:

yum install -y kubelet-1.20.6 kubeadm-1.20.6 kubectl-1.20.6

Enable kubelet at boot:

systemctl enable kubelet

2.2 Configure keepalived and nginx for a highly available k8s apiserver

Install keepalived and nginx on both master nodes:

yum install nginx keepalived -y

Edit the nginx configuration as follows (the two sed commands first remove blank lines and comment lines):

[root@master01 ~]# sed -i /^$/d /etc/nginx/nginx.conf

[root@master01 ~]# sed -i /^#/d /etc/nginx/nginx.conf

[root@master01 ~]# cat /etc/nginx/nginx.conf

user nginx;

worker_processes auto;

error_log /var/log/nginx/error.log;

pid /run/nginx.pid;

include /usr/share/nginx/modules/*.conf;

events {

worker_connections 1024;

}

# Layer-4 load balancing for the apiserver on the two masters

stream {

log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';

access_log /var/log/nginx/k8s-access.log main;

upstream k8s-apiserver {

server 10.2.4.230:6443; # Master1 APISERVER IP:PORT

server 10.2.4.231:6443; # Master2 APISERVER IP:PORT

}

server {

listen 16443; # nginx runs on the master nodes alongside the apiserver, so it cannot listen on 6443 without a port conflict

proxy_pass k8s-apiserver;

}

}

http {

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

sendfile on;

tcp_nopush on;

tcp_nodelay on;

keepalive_timeout 65;

types_hash_max_size 2048;

include /etc/nginx/mime.types;

default_type application/octet-stream;

server {

listen 80 default_server;

server_name _;

location / {

}

}

}
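The upstream block has to list exactly the control-plane IPs from the node table in 1.1. A minimal sketch that generates it from a list of IPs; render_apiserver_upstream is a hypothetical helper, 6443 is the apiserver port used above, and nginx -t (a standard nginx option) can then validate the edited file before nginx is started:

```shell
#!/usr/bin/env bash
# Hypothetical generator for the stream{} upstream block: one
# "server IP:6443;" line per control-plane IP given as an argument.
render_apiserver_upstream() {
  local ip
  echo "upstream k8s-apiserver {"
  for ip in "$@"; do
    printf '    server %s:6443;\n' "$ip"
  done
  echo "}"
}

# Example: render_apiserver_upstream 10.2.4.230 10.2.4.231
# After pasting the result into /etc/nginx/nginx.conf, check the syntax:
# nginx -t
```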

Configure keepalived:

[root@master01 ~]# cat /etc/keepalived/keepalived.conf

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_MASTER

}

vrrp_script check_nginx {

script "/etc/keepalived/check_nginx.sh"

}

vrrp_instance VI_1 {

state MASTER

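interface eth0 # change to the actual NIC name

virtual_router_id 51 # VRRP router ID; must be unique for each instance

priority 100 # priority; set 90 on the backup server

advert_int 1 # VRRP advertisement (heartbeat) interval, default 1 second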

authentication {

auth_type PASS

auth_pass 1111

}

# Virtual IP

virtual_ipaddress {

10.2.4.249/24

}

track_script {

check_nginx

}

}

# vrrp_script: the script that checks whether nginx is working (its result decides whether to fail over)

# virtual_ipaddress: the virtual IP (VIP)
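The file above is for master01. The priority comment says the backup server uses 90; in a typical MASTER/BACKUP pair its state is also set to BACKUP. A minimal sketch that derives master02's file from master01's with sed; the function name and the NGINX_BACKUP router_id are assumptions, and the interface name may also need adjusting on master02:

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the backup node's keepalived.conf from the master's
# by switching state MASTER -> BACKUP, priority 100 -> 90 and the router_id.
# Relies on the exact spellings used in the master01 file.
make_backup_keepalived_conf() {
  local src="$1" dst="$2"
  sed -e 's/^\([[:space:]]*\)state MASTER/\1state BACKUP/' \
      -e 's/^\([[:space:]]*\)priority 100/\1priority 90/' \
      -e 's/router_id NGINX_MASTER/router_id NGINX_BACKUP/' \
      "$src" > "$dst"
}

# Example (on master01, then copy the result to master02):
# make_backup_keepalived_conf /etc/keepalived/keepalived.conf /tmp/keepalived-backup.conf
# scp /tmp/keepalived-backup.conf master02:/etc/keepalived/keepalived.conf
```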

Create the nginx check script:

vim /etc/keepalived/check_nginx.sh

#!/bin/bash

count=$(ps -ef |grep nginx | grep sbin | egrep -cv "grep|$$")

if [ "$count" -eq 0 ];then

systemctl stop keepalived

fi
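The ps | grep | egrep -cv pipeline works, but it has to filter out its own grep processes. A possible alternative, assuming pgrep (from procps) is available: pgrep -x matches the process name exactly, so nothing else in the pipeline can be counted by mistake. nginx_running is a hypothetical function name:

```shell
#!/bin/bash
# Sketch of an alternative process test for check_nginx.sh.
# Returns 0 if a process named exactly $1 (default: nginx) is running.
nginx_running() {
  pgrep -x "${1:-nginx}" > /dev/null
}

# Body of check_nginx.sh using the function:
# if ! nginx_running; then
#   systemctl stop keepalived   # release the VIP so the other master takes over
# fi
```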

Make the check script executable:

chmod +x /etc/keepalived/check_nginx.sh

Start nginx:

systemctl daemon-reload

systemctl start nginx && systemctl enable nginx

If nginx fails to start because the stream module cannot be found, installing the extra modules package fixes it:

yum -y install nginx-all-modules.noarch

Start keepalived:

systemctl start keepalived

systemctl enable keepalived

Initialize the k8s cluster with kubeadm

Create kubeadm-config.yaml on master01:

[root@master01 ~]# cat kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta2

kind: ClusterConfiguration

kubernetesVersion: v1.20.6

controlPlaneEndpoint: 10.2.4.249:16443

imageRepository: registry.aliyuncs.com/google_containers

apiServer:
  certSANs:
  - 10.2.4.230
  - 10.2.4.231
  - 10.2.4.233
  - 10.2.4.249
networking:
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.10.0.0/16

---

apiVersion: kubeproxy.config.k8s.io/v1alpha1

kind: KubeProxyConfiguration

mode: ipvs

Run the initialization:

kubeadm init --config kubeadm-config.yaml

If no error is reported, the initialization succeeded.

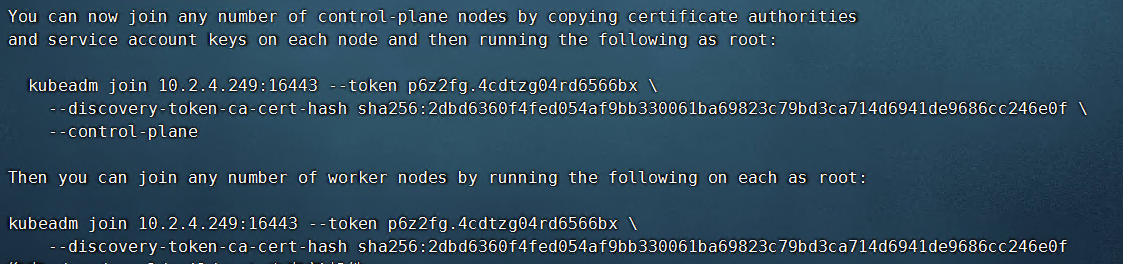

Save the output below; its kubeadm join commands are needed when adding nodes:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join 10.2.4.249:16443 --token 6zarlb.4dfv73p0oltlpcqp \

--discovery-token-ca-cert-hash sha256:be54e77d6fc8db6385e8f7c2f8d55503c63e6c9db9a741ab24ee7e420a621d45 \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.2.4.249:16443 --token 6zarlb.4dfv73p0oltlpcqp \

--discovery-token-ca-cert-hash sha256:be54e77d6fc8db6385e8f7c2f8d55503c63e6c9db9a741ab24ee7e420a621d45

Then, on master01, set up kubectl access as the output instructs:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

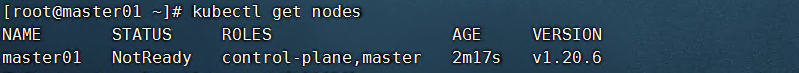

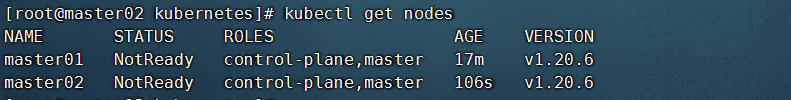

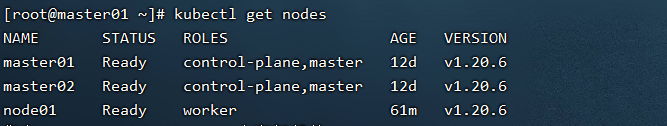

Check the nodes (kubectl get nodes):

Adding a master node

Create the certificate directories on master02:

[root@master02 ~]# mkdir -p /etc/kubernetes/pki/etcd && mkdir -p ~/.kube

Copy the certificates from master01 to master02:

[root@master01 ~]# scp /etc/kubernetes/pki/ca.crt master02:/etc/kubernetes/pki/

ca.crt 100% 1066 48.5KB/s 00:00

[root@master01 ~]# scp /etc/kubernetes/pki/ca.key master02:/etc/kubernetes/pki/

ca.key 100% 1679 91.9KB/s 00:00

[root@master01 ~]# scp /etc/kubernetes/pki/sa.key master02:/etc/kubernetes/pki/

sa.key 100% 1679 68.2KB/s 00:00


[root@master01 ~]# scp /etc/kubernetes/pki/sa.pub master02:/etc/kubernetes/pki/

sa.pub 100% 451 25.8KB/s 00:00

[root@master01 ~]# scp /etc/kubernetes/pki/front-proxy-ca.crt master02:/etc/kubernetes/pki/

front-proxy-ca.crt 100% 1078 121.3KB/s 00:00

[root@master01 ~]# scp /etc/kubernetes/pki/front-proxy-ca.key master02:/etc/kubernetes/pki/

front-proxy-ca.key 100% 1679 115.3KB/s 00:00

[root@master01 ~]# scp /etc/kubernetes/pki/etcd/ca.crt master02:/etc/kubernetes/pki/etcd/

ca.crt 100% 1058 57.7KB/s 00:00

[root@master01 ~]# scp /etc/kubernetes/pki/etcd/ca.key master02:/etc/kubernetes/pki/etcd/

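ca.key

Generate and print a fresh join command (the bootstrap token created by kubeadm init expires after 24 hours by default):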

[root@master01 ~]# kubeadm token create --print-join-command

kubeadm join 10.2.4.249:16443 --token w499dn.bbco46w4usl9pwh1 --discovery-token-ca-cert-hash sha256:be54e77d6fc8db6385e8f7c2f8d55503c63e6c9db9a741ab24ee7e420a621d45

Join master02 to the cluster as a control-plane node by adding the --control-plane flag:

[root@master02 ~]# kubeadm join 10.2.4.249:16443 --token w499dn.bbco46w4usl9pwh1 --discovery-token-ca-cert-hash sha256:be54e77d6fc8db6385e8f7c2f8d55503c63e6c9db9a741ab24ee7e420a621d45 --control-plane

If no error is reported, the join succeeded.
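The --discovery-token-ca-cert-hash value is the SHA-256 digest of the cluster CA's public key, so it can be recomputed on master01 if the kubeadm init output was lost. A sketch based on the openssl pipeline described in the kubeadm documentation, wrapped in a hypothetical function ca_cert_hash; it assumes an RSA CA key, which is what kubeadm generates by default:

```shell
#!/usr/bin/env bash
# Recompute the --discovery-token-ca-cert-hash value: SHA-256 over the CA's
# public key in DER (SubjectPublicKeyInfo) form. Assumes an RSA CA key.
ca_cert_hash() {
  local crt="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$crt" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'   # keep only the hex digest after "(stdin)= "
}

# On master01:
# echo "sha256:$(ca_cert_hash)"
```

On this cluster the result should match the sha256 value in the join commands above.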

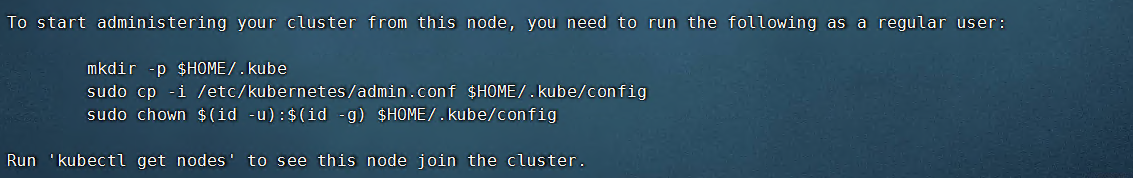

Then run:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

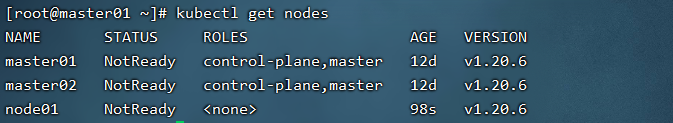

Check the node list:

master02 has successfully joined the cluster.

Adding a worker node

First print the join command for worker nodes:

[root@master01 ~]# kubeadm token create --print-join-command

kubeadm join 10.2.4.249:16443 --token uwuzbn.5uugojkgvoqjth6n --discovery-token-ca-cert-hash sha256:be54e77d6fc8db6385e8f7c2f8d55503c63e6c9db9a741ab24ee7e420a621d45

Run it on the worker node being added:

[root@node01 ~]# kubeadm join 10.2.4.249:16443 --token uwuzbn.5uugojkgvoqjth6n --discovery-token-ca-cert-hash sha256:be54e77d6fc8db6385e8f7c2f8d55503c63e6c9db9a741ab24ee7e420a621d45

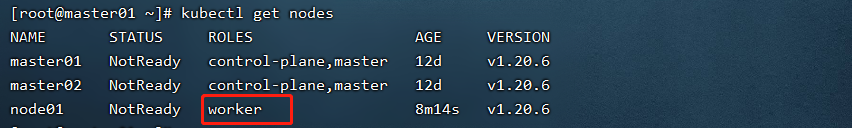

Label the node with the worker role:

[root@master01 ~]# kubectl label node node01 node-role.kubernetes.io/worker=worker

node/node01 labeled

Check again:

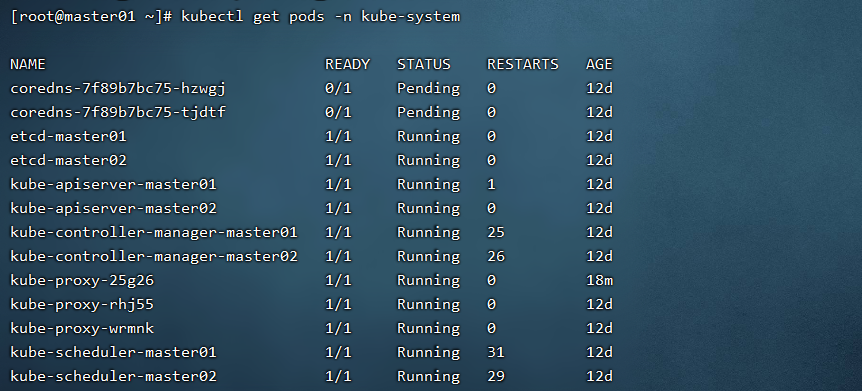

Check the installed components (kubectl get pods -n kube-system):

Note: coredns staying in Pending at this point is expected, because no network plugin has been installed yet.

Install the Kubernetes network plugin Calico

Calico can enforce network policies and assign IP addresses to pods.

Suppose we create pods in different k8s namespaces.

calico.yaml

Install Calico:

[root@master01 ~]# kubectl apply -f calico.yaml

Check the node status again:

All components are now in the Running state.
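To spot nodes that are still NotReady without reading the whole table, the output of kubectl get nodes can be filtered. A minimal sketch; not_ready_nodes is a hypothetical name, and it flags any node whose STATUS is not exactly Ready (so a cordoned node showing Ready,SchedulingDisabled is reported too):

```shell
#!/usr/bin/env bash
# Hypothetical filter: read "kubectl get nodes --no-headers" output on stdin and
# print the name of every node whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# On master01:
# kubectl get nodes --no-headers | not_ready_nodes
# Or block until every node reports Ready:
# kubectl wait --for=condition=Ready node --all --timeout=300s
```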

This completes the installation of a multi-master k8s cluster with kubeadm!

The files used in this article can be downloaded from the author's QQ group.